UW Interactive Data Lab

Polyjuice: Generating Counterfactuals for Explaining, Evaluating, and Improving Models

Tongshuang (Sherry) Wu, Marco Tulio Ribeiro, Jeffrey Heer, Daniel S. Weld.

Proc. Association for Computational Linguistics (ACL), 2021

Abstract

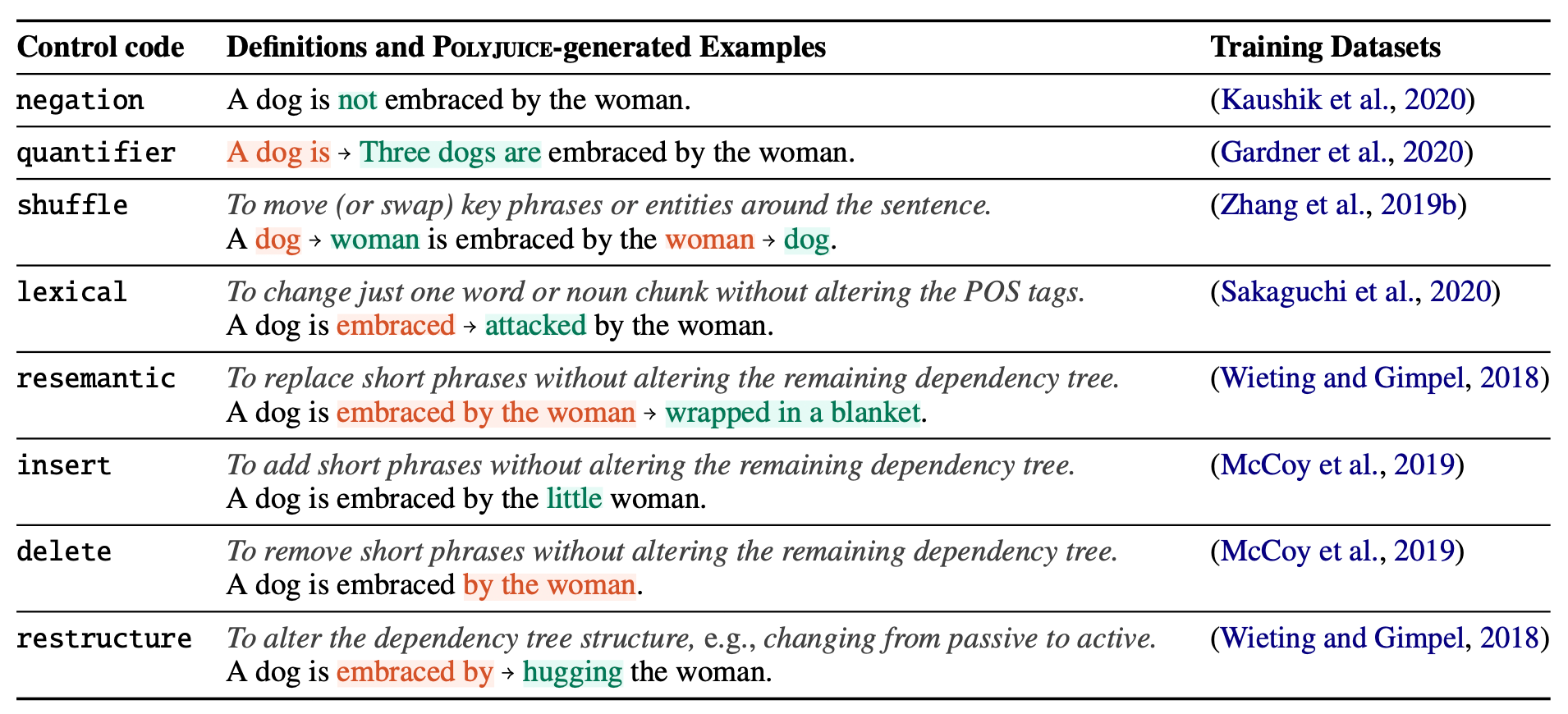

While counterfactual examples are useful for analysis and training of NLP models, current generation methods either rely on manual labor to create very few counterfactuals, or only instantiate limited types of perturbations such as paraphrases or word substitutions. We present Polyjuice, a general-purpose counterfactual generator that allows for control over perturbation types and locations, trained by finetuning GPT-2 on multiple datasets of paired sentences. We show that Polyjuice produces diverse sets of realistic counterfactuals, which in turn are useful in various distinct applications: improving training and evaluation on three different tasks (with around 70% less annotation effort than manual generation), augmenting state-of-the-art explanation techniques, and supporting systematic counterfactual error analysis by revealing behaviors easily missed by human experts.

BibTeX

@inproceedings{2021-polyjuice,
  title = {Polyjuice: Generating Counterfactuals for Explaining, Evaluating, and Improving Models},
  author = {Wu, Tongshuang AND Ribeiro, Marco AND Heer, Jeffrey AND Weld, Dan},
  booktitle = {Proc. Association for Computational Linguistics (ACL)},
  year = {2021},
  url = {https://uwdata.github.io/uwdata/papers/polyjuice},
  doi = {10.18653/v1/2021.acl-long.523}
}